Generative AI is everywhere; it feels like the most quickly adopted technology in recent memory. Although it’s easy to use tools like ChatGPT and DALL-E online, I also wanted to play around with the technology myself, so I set a goal of configuring a private chatbot. What does “private” mean in this case? Well, the […]

Tag: AI

Depending on who you ask, ChatGPT (and other similar LLM chatbots) might be impressive, dangerous, misunderstood, or one of many other things. However, it and other recent generative AI tools have created plenty of entertaining content. At the crossover of my interests in tabletop RPGs and computing, I read an article about playing D&D with ChatGPT as the […]

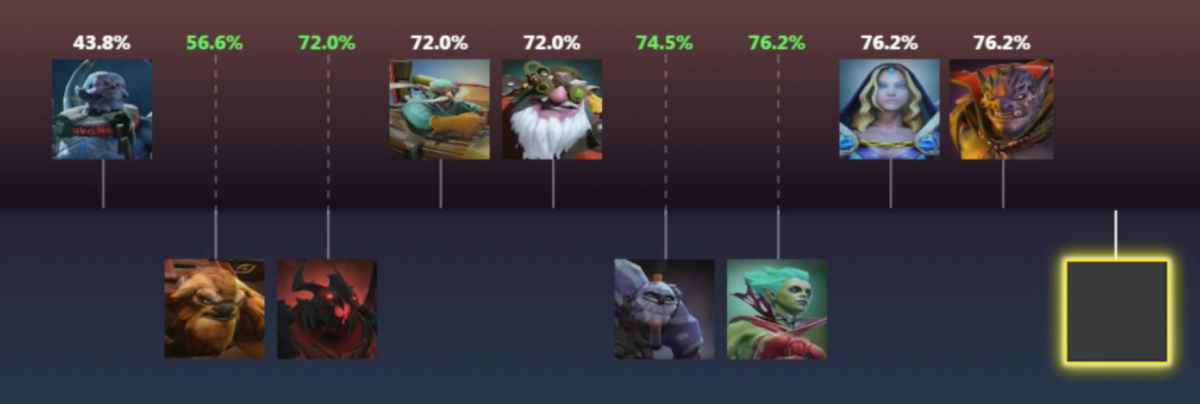

In 1996, Deep Blue played competitive chess against world champion Garry Kasparov. In 2011, Watson beat Ken Jennings on Jeopardy! In 2016, AlphaGo played and beat Lee Sedol at Go. And in 2018, OpenAI Five played 2 games against professional Dota 2 players, and the humans survived 2 very fun games. But in another year, I think […]

Last Thursday, I went to a panel discussion on “Legal Perspectives in Artificial Intelligence,” hosted at Stanford Law School by the Center for Internet and Society. My mind is mostly buried in the technical side of AI, but since I have recently become more interested in policy in general and in the social impact of technology, I thought […]

A Few Thoughts on Watson on Jeopardy

Earlier this week, Watson, an artificial intelligence program developed by IBM, competed against Ken Jennings and Brad Rutter on Jeopardy! Since this was the closest my nerd roots had ever come to pop culture, I avidly watched all 3 days of games and the NOVA episode about it, and I attended an on-campus viewing party hosted […]